With streaming now part of the daily entertainment routine for viewers worldwide, demand for over-the-top (OTT) video services continues to soar. As viewership grows, so do expectations, pushing service providers to prioritize exceptional user experiences alongside high-quality content. The combination of engaging content, easy access, and seamless playback in turn fuels further demand for OTT platforms.

Such increasing demand places substantial pressure on various elements of the video delivery ecosystem. The impact on these components varies, influenced by factors including the system’s architecture, user behavior, and the underlying business model. Inherently complex, OTT video infrastructures are shaped by a myriad of considerations: commercial partnerships, historical service decisions, regulatory and security mandates, and even the dynamics within organizations themselves.

This complexity often results in significant performance demands on Digital Rights Management (DRM) systems, which are a crucial element for content security. While effective capacity management and cloud scalability can generally meet these demands, there are instances when these measures alone are insufficient. The risk of service disruptions, particularly during high-profile media events, underscores the importance of strategic foresight and planning to mitigate potential interruptions.

Moreover, the pursuit of robust, reliable service delivery must also consider economic and environmental sustainability. For video service providers, the question arises: Why maintain a fleet of 20 servers operating at full capacity around the clock when a more streamlined setup could achieve the same outcomes? Adopting more sustainable practices not only benefits the bottom line but also aligns with broader environmental objectives, making it a critical consideration for forward-thinking companies in the OTT space.

DRM system stress points and consumer impact

DRM systems play a critical role on OTT video platforms by protecting content with encryption and enforcing appropriate usage restrictions and access control. Ideally, these security measures operate transparently, without affecting the end user's experience. In other words, DRM is vital yet largely invisible to consumers: its purpose is content protection rather than end-user functionality.

Obtaining the content keys is typically a sequential, blocking step that must complete before playback can start, which places DRM services squarely on the critical path. These services must perform several operations quickly: validate the user's request, retrieve the content keys and encrypt them for the requesting device, and enforce the applicable security policies. Each operation, however efficient, still consumes precious time and computational resources.
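To make that cost concrete, the sketch below turns a per-request CPU cost into a rough fleet size. It assumes each license request is CPU-bound and that servers run at a target utilization below 100% to leave headroom. The function name `servers_needed`, the 20 ms cost per request, and the 8-core servers are hypothetical figures for illustration, not measurements.

```python
import math


def servers_needed(peak_rps: float, cpu_ms_per_request: float,
                   cores_per_server: int, target_utilization: float = 0.7) -> int:
    """Rough fleet size for a CPU-bound DRM license service (hypothetical model)."""
    # Requests one core can complete per second if it did nothing else.
    per_core_rps = 1000.0 / cpu_ms_per_request
    # Sustainable rate per server, keeping headroom below full utilization.
    per_server_rps = per_core_rps * cores_per_server * target_utilization
    return math.ceil(peak_rps / per_server_rps)


# Hypothetical inputs: 800 requests/s at peak, 20 ms of CPU per request, 8 cores per server.
print(servers_needed(800, 20, 8))  # 3
```

The same arithmetic works in reverse: halving either the per-request cost or the peak request rate roughly halves the fleet, which is why reducing DRM request volume matters for both cost and sustainability.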

Problems arise when large numbers of users start streams at the same time, causing an abrupt spike in demand. The resulting flood of requests can exceed the DRM system's capacity and cause playback failures. Such failures often prompt viewers to retry repeatedly, adding load and affecting even more users.

DRM request throughput vs stream concurrency

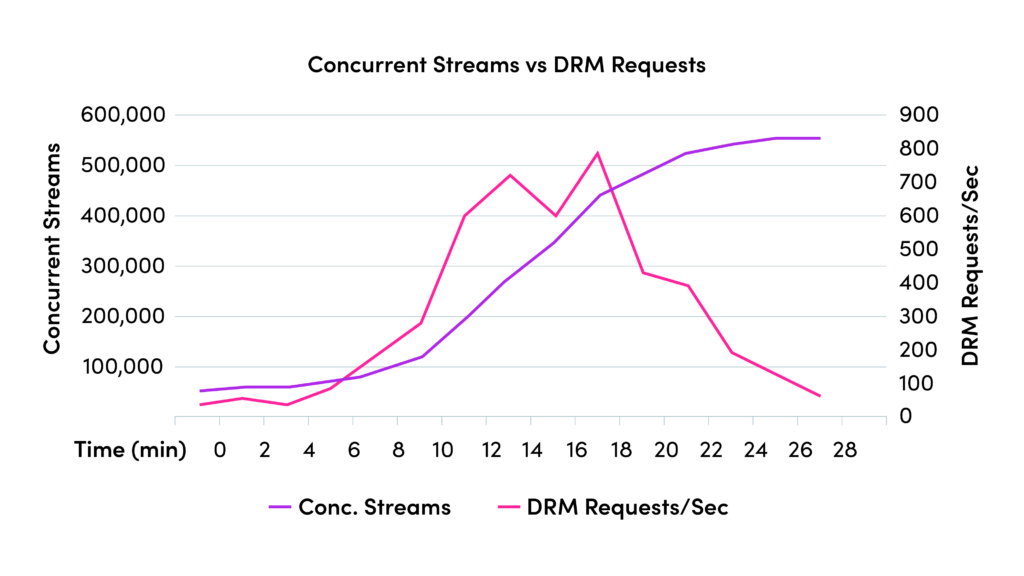

Clarifying the distinction between the throughput of DRM requests and the concurrency of content streams is pivotal to addressing system capacity issues.

Typically, a device communicates with DRM services mainly at the start of playback to obtain the necessary decryption key. Once playback begins, interaction with DRM services drops off sharply, because there is seldom a need to acquire a new key or renew the license for the current one. Consequently, millions of viewers can stream concurrently while placing only sporadic demands on the DRM system.

This distinction is crucial because it determines how infrastructure is scaled and managed. The accompanying graphic illustrates a scenario in which DRM request throughput peaks below 800 requests per second while concurrent streams exceed 500,000.
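The distinction can be reproduced with a small simulation. The sketch below assumes each viewer triggers exactly one license request when playback starts and none afterwards, which is a simplification (key rotation or license renewal would add requests); the viewer count, arrival rate, and session length are hypothetical.

```python
from collections import Counter


def peak_license_rps(sessions):
    """Peak license requests in any one-second bucket, assuming one request per session start."""
    starts = Counter(int(start) for start, _ in sessions)
    return max(starts.values(), default=0)


def peak_concurrency(sessions):
    """Peak number of sessions playing at the same time."""
    events = []
    for start, end in sessions:
        events.append((start, 1))
        events.append((end, -1))
    # At equal timestamps, -1 sorts before +1, so a session ending frees its slot first.
    events.sort()
    current = peak = 0
    for _, delta in events:
        current += delta
        peak = max(peak, current)
    return peak


# 10,000 viewers join at 20 per second and each watches for two hours.
sessions = [(i // 20, i // 20 + 7200) for i in range(10_000)]
print(peak_license_rps(sessions))  # 20
print(peak_concurrency(sessions))  # 10000
```

Concurrency keeps climbing as long as viewers stay, while license throughput only reflects how fast new viewers arrive.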

If the DRM system cannot scale to the required request throughput, viewers may experience playback failures.

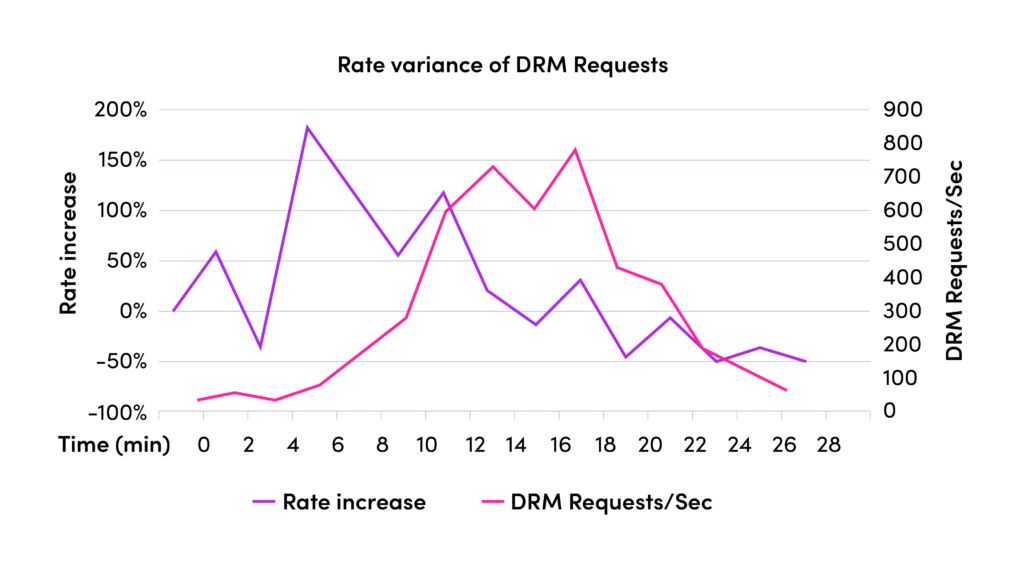

The rate at which DRM requests increase is a critical performance metric: it captures how much the request volume changes from one interval to the next. For instance, the accompanying graph shows a moment when the rate of DRM requests doubles within a short timeframe.
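Tracking this metric can be as simple as comparing each interval's request count with the previous one. In the sketch below, the per-minute counts are hypothetical, and flagging a doubling is an illustrative alerting choice rather than an established threshold.

```python
def growth_ratios(counts):
    """Ratio of each interval's request count to the previous interval's count."""
    ratios = []
    for prev, cur in zip(counts, counts[1:]):
        # Growth from zero requests is treated as unbounded; zero to zero as no change.
        ratios.append(cur / prev if prev else (float("inf") if cur else 1.0))
    return ratios


def doubling_points(counts, factor=2.0):
    """Indices of intervals whose request count is at least `factor` times the previous one."""
    return [i + 1 for i, ratio in enumerate(growth_ratios(counts)) if ratio >= factor]


# Hypothetical DRM requests per minute around the start of a live event.
per_minute = [300, 320, 310, 650, 700]
print(doubling_points(per_minute))  # [3]
```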

It is crucial to recognize that live events pose a significantly greater challenge in terms of DRM throughput demand compared to Video on Demand (VoD) services. While VoD consumption may exhibit peaks during certain hours, it lacks the synchronized surge typically associated with live events, resulting in a more gradual increase in DRM requests.
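The difference can be illustrated by giving the same audience two arrival patterns. The sketch below assumes, for simplicity, that arrivals are spread evenly and that each arrival triggers one license request: 60,000 viewers joining within one minute stands in for a live kick-off, and the same viewers spread over an hour stand in for VoD. Both patterns are hypothetical.

```python
from collections import Counter


def peak_rps(start_times):
    """Peak license requests in any one-second bucket (one request per playback start)."""
    return max(Counter(int(t) for t in start_times).values(), default=0)


viewers = 60_000
live_starts = [i % 60 for i in range(viewers)]    # everyone joins within the first minute
vod_starts = [i % 3600 for i in range(viewers)]   # the same audience spread over an hour

print(peak_rps(live_starts))  # 1000
print(peak_rps(vod_starts))   # 17
```

Same audience, same number of license requests in total, yet the synchronized pattern needs roughly sixty times the peak capacity.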

Understanding these dynamics is essential for designing systems capable of delivering uninterrupted service, particularly during high-traffic events, and ensuring a seamless viewing experience for all users.

Strategies for reducing stress on DRM services

Identifying and implementing optimizations within DRM services is not just about bolstering security; it’s about enhancing the overall user experience, driving down operational costs, and reducing the environmental footprint.

The following sections explore a range of strategies designed to alleviate stress on DRM services, keeping them robust and responsive, particularly under the high-load conditions characteristic of peak streaming periods.

Use of caching mechanisms in DRM

Caching is a pivotal strategy for managing the load on DRM systems during high-demand events. By retaining a decryption key in the video application's cache for the duration of a significant event, such as a soccer match, we can substantially reduce the volume of key requests generated by viewers frequently tuning in and out. This behavior is typical during major live broadcasts, where viewers may briefly switch channels and return moments later.

The tendency for viewers to intermittently leave and rejoin a live event translates to a predictable pattern of DRM key requests. If these keys are cached after their initial use, most returning viewers can seamlessly resume playback without necessitating a new DRM handshake, minimizing unnecessary DRM traffic while also ensuring a smoother viewing experience.

Moreover, effective caching can maintain key availability even with a limited cache size, given the high probability of reuse within the typical timeframe of a live event. This method proves to be a crucial optimization, easing the burden on DRM services and aligning with the goal of delivering uninterrupted, high-quality streaming to consumers.
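A client-side cache along these lines can be sketched as follows. It assumes the DRM license policy permits the player to retain keys for their stated validity period; `KeyCache`, `fetch_key`, and the three-hour validity are hypothetical. In practice the key material usually stays inside the platform's DRM client, so a real cache would hold licenses or session handles rather than raw keys.

```python
import time
from collections import OrderedDict


class KeyCache:
    """Hypothetical client-side cache keyed by key ID, with expiry and LRU eviction."""

    def __init__(self, fetch_key, max_entries=4, clock=time.monotonic):
        self._fetch_key = fetch_key      # performs the DRM license request
        self._max_entries = max_entries
        self._clock = clock
        self._entries = OrderedDict()    # key_id -> (key, expires_at)

    def get(self, key_id):
        now = self._clock()
        entry = self._entries.get(key_id)
        if entry is not None and entry[1] > now:
            self._entries.move_to_end(key_id)  # mark as most recently used
            return entry[0]
        # Missing or expired: go back to the DRM service.
        key, ttl_seconds = self._fetch_key(key_id)
        self._entries[key_id] = (key, now + ttl_seconds)
        self._entries.move_to_end(key_id)
        while len(self._entries) > self._max_entries:
            self._entries.popitem(last=False)  # evict the least recently used entry
        return key


# Usage: a viewer hops between the match and a news channel every five minutes.
license_calls = []

def fake_fetch(key_id):
    license_calls.append(key_id)
    return b"key-for-" + key_id.encode(), 3 * 3600  # hypothetical 3-hour validity

now = [0.0]
cache = KeyCache(fake_fetch, max_entries=2, clock=lambda: now[0])
for channel_key in ["match", "news", "match", "match", "news"]:
    cache.get(channel_key)
    now[0] += 300
print(len(license_calls))  # 2
```

Five playback starts produce only two license requests; the small `max_entries` reflects the point above that a limited cache suffices when reuse is this likely.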

Implementing the principle of proportionality

The principle of proportionality in security postulates that the level of controls and protections should correspond to the value of the assets and the intensity of potential threats. For media and OTT platforms, this translates to deploying the most stringent security measures for high-value content, such as premium live broadcasts and sought-after on-demand assets, while adopting a more lenient stance for content of lower value.

Content considered to be of lower value might include live channels that are widely available as part of basic OTT service packages or that are broadcast free-to-air. For such assets, rigorous DRM may be unnecessary and can introduce inefficiencies.

To effectively apply the principle of proportionality, OTT services can consider the following tactics:

- Key sharing across multiple channels: Using a common key for several live channels eliminates the need to obtain a new key on every channel switch. This streamlines the viewer's experience by reducing key acquisition overhead.

- Extending key lifespan: For channels included in basic service packages, extending the validity of content keys to several hours, or potentially days, reduces the frequency of key renewal requests.
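The effect of both tactics on license traffic can be sketched with a simple policy table. The channel names, key IDs, and validity periods below are hypothetical, and the model assumes the player reuses any key it already holds during a short viewing session.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KeyPolicy:
    key_id: str
    validity_hours: float


# Hypothetical policies: basic-tier channels share one long-lived key,
# while the premium channel keeps its own short-lived key.
SHARED = {
    "news": KeyPolicy("basic-shared", 72),
    "weather": KeyPolicy("basic-shared", 72),
    "kids": KeyPolicy("basic-shared", 72),
    "sports-premium": KeyPolicy("sports-premium", 4),
}
# Baseline for comparison: every channel has its own 4-hour key.
PER_CHANNEL = {channel: KeyPolicy(channel, 4) for channel in SHARED}


def license_requests(zap_sequence, policies):
    """License requests for a channel-switching sequence: one per distinct key ID.

    Expiry is ignored because the session is short relative to key validity.
    """
    return len({policies[channel].key_id for channel in zap_sequence})


def renewals_per_day(policy):
    """How many times per day a continuously watching device must renew this key."""
    return 24 / policy.validity_hours


zaps = ["news", "weather", "kids", "news", "sports-premium", "weather"]
print(license_requests(zaps, SHARED))       # 2
print(license_requests(zaps, PER_CHANNEL))  # 4
print(renewals_per_day(SHARED["news"]), renewals_per_day(PER_CHANNEL["news"]))
```

Key sharing cuts the requests caused by channel switching, while the longer validity cuts renewals for viewers who stay on a basic channel.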

Implementing these methods not only reduces the DRM system load but also enhances user satisfaction by facilitating quicker channel switching, thus removing potential delays caused by DRM processes.

Pre-fetching DRM keys to streamline access

The strategy of pre-fetching DRM keys addresses the potential bottleneck that occurs when a multitude of viewers simultaneously initiate a stream at the start of a major event. By enabling the application to pre-load DRM keys in the background ahead of an anticipated surge, the initial demand on the DRM system can be substantially reduced.

For devices such as set-top boxes, this approach can be further optimized by pre-loading keys for adjacent channels. This foresight significantly trims the delay commonly experienced when viewers switch channels, thereby offering a smoother, more engaging user experience.
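Both ideas can be sketched in a few lines. `adjacent_channels` picks the neighbors of the current channel in the lineup, and `prefetch_time` spreads pre-event key loading randomly over a window so that the pre-fetch itself does not become a synchronized spike. The function names, the wrap-around lineup, and the uniform random spread are assumptions for illustration, and the approach presumes the application knows the event's key ID before the event starts.

```python
import random


def adjacent_channels(lineup, current, radius=1):
    """Channels within `radius` positions of `current`, nearest first, wrapping around the lineup."""
    i = lineup.index(current)
    n = len(lineup)
    neighbors = []
    for offset in range(1, radius + 1):
        for j in ((i + offset) % n, (i - offset) % n):
            channel = lineup[j]
            if channel != current and channel not in neighbors:
                neighbors.append(channel)
    return neighbors


def prefetch_time(event_start, window_seconds, rng=random):
    """Random moment within `window_seconds` before `event_start` at which to pre-load the event key."""
    return event_start - rng.uniform(0, window_seconds)


lineup = ["ch1", "ch2", "ch3", "ch4", "ch5"]
print(adjacent_channels(lineup, "ch1"))            # ['ch2', 'ch5']
print(adjacent_channels(lineup, "ch3", radius=2))  # ['ch4', 'ch2', 'ch5', 'ch1']
```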

Pre-fetching DRM keys does involve a complex interplay of system components and is not universally advantageous; its benefits are most notable in scenarios where rapid video start-up is crucial. Deployed judiciously, this preemptive measure can be an effective component of a broader strategy to enhance performance on OTT streaming platforms, particularly during peak viewership events.

Mitigate credential or token authentication sharing

Sharing credentials (or authentication tokens) not only causes revenue loss through avoided subscriptions or payments but also raises operational costs, because the platform must serve more streams than its predicted number of users would require.

Unmanaged credential sharing can also undermine capacity planning and flood systems with unexpected requests. A mechanism to curb this form of piracy is therefore important for a sustainable service.
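One common mitigation, though not the only one, is to cap the number of simultaneous streams per account and check that cap before a license is issued, so excess sessions are turned away before they reach the key-delivery path. The sketch below is a hypothetical in-memory version; a production service would need state shared across servers and a way to expire sessions from devices that disappear without calling `stop`.

```python
class StreamLimiter:
    """Hypothetical in-memory cap on simultaneous streams per account."""

    def __init__(self, max_streams):
        self.max_streams = max_streams
        self._active = {}  # account_id -> set of device_ids currently streaming

    def try_start(self, account_id, device_id):
        """Return True if this device may start (or continue) a stream."""
        devices = self._active.setdefault(account_id, set())
        if device_id in devices:
            return True  # the same device restarting does not count twice
        if len(devices) >= self.max_streams:
            return False
        devices.add(device_id)
        return True

    def stop(self, account_id, device_id):
        self._active.get(account_id, set()).discard(device_id)


limiter = StreamLimiter(max_streams=2)
print(limiter.try_start("acct-1", "tv"))      # True
print(limiter.try_start("acct-1", "phone"))   # True
print(limiter.try_start("acct-1", "laptop"))  # False: over the cap
limiter.stop("acct-1", "phone")
print(limiter.try_start("acct-1", "laptop"))  # True
```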

Prevent retry floods

Unpredictability is also an inherent challenge. A robust strategy must include generic failsafes to cushion the system against unforeseen disruptions. One common issue is the ‘retry flood’ phenomenon, where a service interruption prompts users to repeatedly attempt to play a video, resulting in a surge of requests that can overwhelm the DRM system, mirroring a Distributed Denial-of-Service (DDoS) attack.

To counteract this, previously discussed strategies can significantly reduce the overall impact. However, an additional layer of defense is the integration of intelligent retry logic within the video application itself. Such logic can delay subsequent playback attempts in the event of an error, spacing out the retries and thus mitigating a potential flood of requests.
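A widely used form of such logic is exponential backoff with random jitter: each retry may wait up to twice as long as the previous one, and the random component stops devices that failed together from retrying together. The sketch below uses the "full jitter" variant; `play_with_retries`, `PlaybackError`, and the default delays are hypothetical choices, and `sleep` and `rng` are injectable so the behavior can be tested without real waiting.

```python
import random
import time


class PlaybackError(Exception):
    """Hypothetical error raised when playback (for example, the license request) fails."""


def play_with_retries(start_playback, max_attempts=5, base=1.0, cap=60.0,
                      sleep=time.sleep, rng=random):
    """Call start_playback, retrying failures with full-jitter exponential backoff."""
    for attempt in range(max_attempts):
        try:
            return start_playback()
        except PlaybackError:
            if attempt == max_attempts - 1:
                raise  # give up and surface the error to the viewer
            # Wait a random time in [0, min(cap, base * 2**attempt)] seconds.
            sleep(rng.uniform(0, min(cap, base * 2 ** attempt)))


# Usage: playback fails twice (e.g. the DRM service is overloaded), then succeeds.
calls = []

def flaky_start():
    calls.append(1)
    if len(calls) < 3:
        raise PlaybackError("license request failed")
    return "playing"

waits = []
print(play_with_retries(flaky_start, sleep=waits.append, rng=random.Random(1)))  # playing
print(len(waits))  # 2
```

The `cap` bounds how long a viewer can be kept waiting, and `max_attempts` ensures a failing device eventually stops adding load.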

Coordination with third parties

Effective communication in advance with third-party services involved in the OTT streaming ecosystem is crucial for managing sudden increases in demand. Ensuring that all stakeholders are prepared for potential spikes in viewer activity can enhance the responsiveness and reliability of the service.

Summary

The escalating demand for OTT video services presents a complex set of challenges for service providers, particularly in managing DRM system efficiency and maintaining a high-quality user experience. This article advocates for a comprehensive strategy encompassing caching, proportionate security measures, key pre-fetching, combating credential sharing, preventing retry floods, and enhanced coordination with third-party services.

Implementing any of these recommendations will not only help address the immediate challenges posed by increased demand but also pave the way for more sustainable, efficient, and user-centric OTT video streaming services. Through proactive management, OTT providers can ensure their platforms remain competitive and continue to meet the evolving needs of their audiences.